Welcome!

Assistive AV for:

Assistive solutions for impaired access are crucial for creating an inclusive and sustainable society. These technologies, such as audio-visual aids and adaptive infrastructure, ensure that individuals with impairments can navigate and participate fully in public life. By incorporating eco-friendly, energy-efficient designs, these solutions not only enhance accessibility but also support sustainability goals.

Active Projects


Audio-Visual-Based Assistive Navigation for Visually Impaired Persons

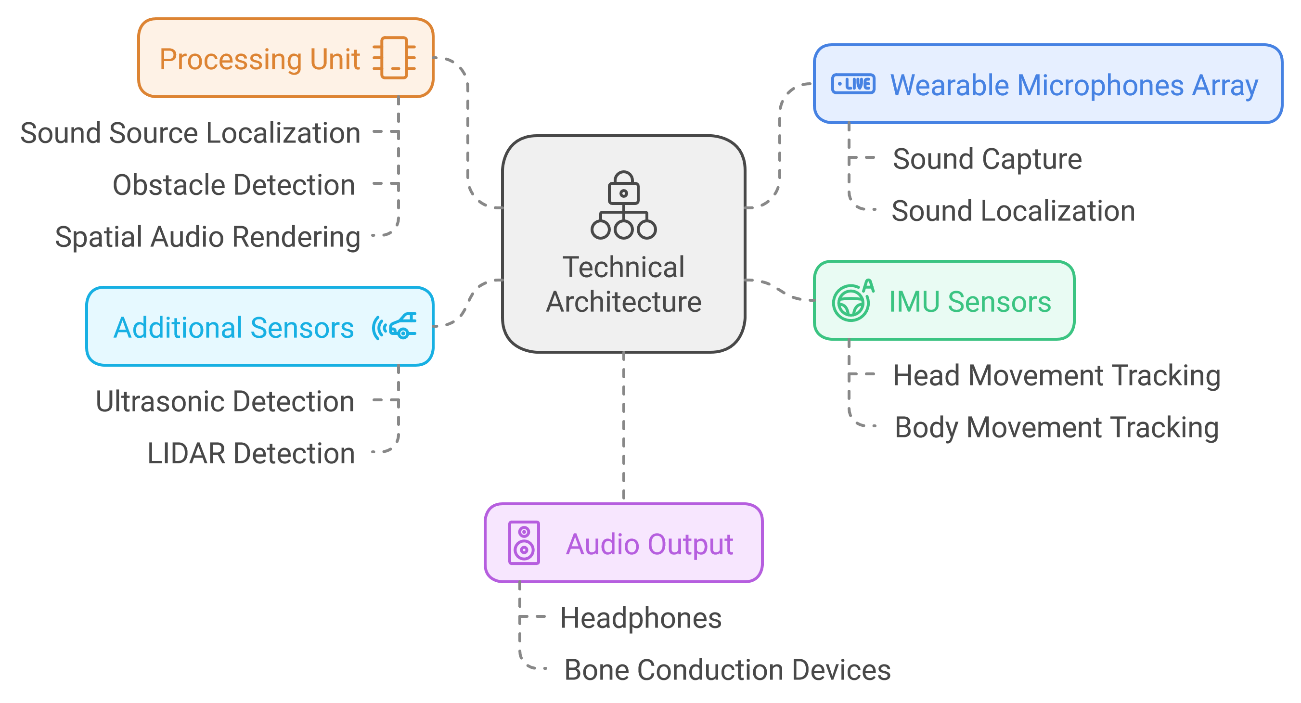

The goal of this project is to develop a wearable assistive navigation and wayfinding system that uses audio-visual sensors and real-time feedback to help visually impaired individuals navigate complex, noisy environments, particularly those with high traffic density. The system will enable users to localize sound-producing objects, detect obstacles, and receive spatial audio cues for situational awareness. It will also integrate 3D camera technology and object classification algorithms to help users navigate supermarket environments independently: the device will guide users to specific sections, identify products on shelves, and offer real-time assistance in selecting items. This technology has the potential to empower visually impaired individuals by enhancing their independence and safety.

Target Audience

Primary: Visually impaired individuals who want to visit places, travel, and shop independently.

Secondary: Supermarket chains and retail stores aiming to provide accessibility solutions for customers with disabilities and visual impairments.

Objectives

Real-Time Sound Localization: Enable users to accurately locate sound-producing objects such as vehicles, pedestrians, and street activities.

Obstacle Detection and Avoidance: Provide immediate warnings of nearby obstacles, including those that produce no sound (e.g., curbs, walls, and tables and chairs on footpaths).

Spatial Audio Feedback: Convey spatial orientation and distances of objects through headphones (earphones), allowing users to perceive their surroundings.

Alert Mechanism for Danger: Provide real-time alerts for fast-approaching hazards, especially silent ones such as cyclists, to support proactive avoidance.

Enable Independent Navigation: Provide users with clear, real-time guidance to navigate supermarket aisles and sections.

Intuitive Feedback: Use spatial audio and spoken prompts to communicate directions and product details.

Enhance Safety and User Experience: Help users avoid obstacles and improve accessibility, ensuring a safe shopping experience.

Seamless Usability: Design a user-friendly, unobtrusive wearable device that can operate in noisy and dynamic urban environments.
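The spatial audio and danger-alert objectives above can be illustrated with a minimal sketch. It is not the project's implementation: the function names, the 10 m audible range, the linear loudness falloff, and the 3-second alert threshold are all assumptions chosen for illustration. The sketch maps an object's bearing and distance to left/right headphone gains using constant-power panning, and flags a hazard when its estimated time to collision drops below a threshold.

```python
import math
from typing import Tuple


def stereo_gains(azimuth_deg: float, distance_m: float,
                 max_range_m: float = 10.0) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for an object relative to the user.

    azimuth_deg: -90 is hard left, 0 is straight ahead, +90 is hard right.
    max_range_m: assumed distance beyond which the cue is silent.
    """
    # Clamp the bearing to the frontal half-plane.
    azimuth = max(-90.0, min(90.0, azimuth_deg))
    # Constant-power panning: map the bearing onto a quarter circle so that
    # left_gain**2 + right_gain**2 stays constant for a given loudness.
    pan = (azimuth + 90.0) / 180.0 * (math.pi / 2.0)
    # Assumed linear falloff: closer objects sound louder.
    loudness = max(0.0, 1.0 - distance_m / max_range_m)
    return loudness * math.cos(pan), loudness * math.sin(pan)


def time_to_collision(distance_m: float, closing_speed_mps: float) -> float:
    """Seconds until the object reaches the user; infinite if not approaching."""
    if closing_speed_mps <= 0.0:
        return math.inf
    return distance_m / closing_speed_mps


def should_alert(distance_m: float, closing_speed_mps: float,
                 threshold_s: float = 3.0) -> bool:
    """True when the object would arrive within the (assumed) alert window."""
    return time_to_collision(distance_m, closing_speed_mps) <= threshold_s


if __name__ == "__main__":
    print(stereo_gains(-90.0, 5.0))   # object on the user's left, 5 m away
    print(should_alert(6.0, 4.0))     # cyclist 6 m away closing at 4 m/s
```

Constant-power panning keeps perceived loudness roughly steady as an object moves from one side to the other, so loudness can be reserved for conveying distance. A real device would more likely use head-related transfer functions for full 3D cues.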

Technical Architecture

Impact

This project offers a pioneering approach to assist visually impaired individuals, addressing challenges in crowded, noisy environments by leveraging real-time acoustic data processing. The system aims to increase autonomy and safety by providing a navigational tool that relies on natural sound cues and spatial audio, enhancing the user's environmental awareness.

This system's long-term goal is to become an affordable and scalable tool that significantly improves the quality of life and safety of the visually impaired community.

Enhanced Independence: Enables visually impaired individuals to navigate supermarkets independently.

Improved Accessibility: Provides retailers with a unique solution to support inclusivity and improve customer experience.

Real-World Skill Enhancement: Users become more confident in navigating and understanding spatial layouts in other settings as well.


Scalability: The platform can be expanded to other public venues like malls, airports, and libraries, creating broader impact.

Sign Language Detection, Interpretation, and Conversion System

Communication is a fundamental aspect of human interaction, yet individuals with speech impairments or mutism often face significant barriers when engaging with others. For those who rely on sign language as their primary mode of communication, the challenge lies in interacting with people who do not understand this visual language. Similarly, muted individuals may struggle to comprehend spoken language in real-time without additional support.

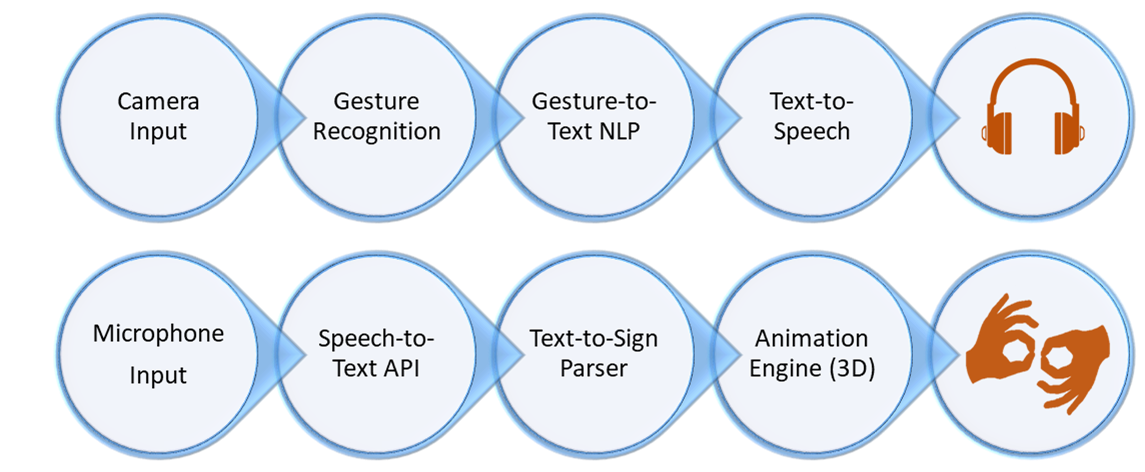

To address these issues, we propose a Sign Language Detection and Interpretation System, which facilitates seamless two-way communication. The system leverages Artificial Intelligence (AI), Machine Learning (ML), Natural Language Processing (NLP), and 3D Animation Technologies to translate sign language gestures into spoken language and vice versa. By interpreting sign language through cameras and wearable sensors and rendering spoken language into animated sign language, this system aims to break down communication barriers and promote inclusivity.
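One step in the sign-to-speech direction can be sketched in code. Assume, purely for illustration, that a gesture classifier emits one label per video frame, with `"_"` meaning "no sign". The hypothetical helper `frames_to_glosses` below collapses that noisy per-frame stream into an ordered list of signs, which a later stage could turn into a sentence and pass to a speech synthesizer. The label names, the three-frame minimum, and the function itself are assumptions, not part of the proposed system.

```python
from itertools import groupby
from typing import Iterable, List


def frames_to_glosses(frame_labels: Iterable[str], min_frames: int = 3,
                      blank: str = "_") -> List[str]:
    """Collapse per-frame gesture labels into an ordered list of signs.

    A run of identical labels shorter than min_frames is treated as
    classifier noise and ignored. A sufficiently long run of `blank`
    counts as a pause, after which the same sign may be emitted again.
    """
    glosses: List[str] = []
    previous = None  # last sign emitted since the most recent pause
    for label, run in groupby(frame_labels):
        run_length = sum(1 for _ in run)
        if run_length < min_frames:
            continue  # too short to trust
        if label == blank:
            previous = None  # real pause: allow a repeated sign next
            continue
        if label != previous:
            glosses.append(label)
            previous = label
    return glosses


if __name__ == "__main__":
    frames = (["_"] * 4 + ["HELLO"] * 5 + ["THANKS"] + ["HELLO"] * 3
              + ["_"] * 3 + ["HELP"] * 4 + ["_"] * 2)
    print(" ".join(frames_to_glosses(frames)))
```

Requiring a minimum run length trades a little latency for stability: a single misclassified frame in the middle of a sign neither inserts a spurious word nor duplicates the sign around it.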

Flow Diagram: System Architecture

Uses of the System

The proposed system has a wide range of applications across various sectors, making it a versatile tool for improving accessibility and fostering communication.

Healthcare: Enables seamless communication between doctors, nurses, and patients who are muted or rely on sign language. Facilitates effective diagnosis and treatment discussions in emergency or routine medical care.

Education: Helps teachers communicate with students who use sign language, ensuring they can participate in mainstream classrooms. Assists muted students in understanding lectures delivered in spoken language.

Workplace: Enhances inclusivity by allowing muted employees to engage in meetings and discussions with colleagues who do not understand sign language. Simplifies workplace communication, fostering a more collaborative environment.

Public Services: Improves access to government services for muted individuals by facilitating real-time communication at help desks and customer service counters. Bridges communication gaps in public transportation, law enforcement, and other civic interactions.

Social Interactions: Enables muted individuals to engage in conversations at social gatherings, events, and everyday interactions with the general population.

Retail and Hospitality: Assists muted individuals in communicating with service staff in stores, restaurants, and hotels, enhancing customer experiences. Provides businesses with a tool to ensure accessibility compliance and inclusivity.

Emergency Services: Supports effective communication during emergencies, allowing muted individuals to seek help and convey critical information.

Entertainment and Media: Facilitates the translation of audio content (e.g., podcasts, TV shows) into animated sign language for muted audiences. Makes entertainment more accessible by integrating real-time interpretation of spoken dialogue into sign language.